Overview

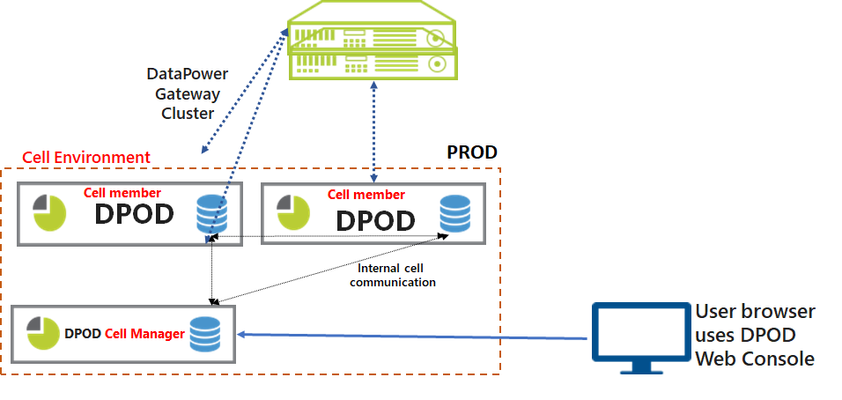

The cell environment (also referred to as the "federated environment") distributes DPOD's Store and DPOD's processing (performed by DPOD's agents) across multiple federated servers in order to handle high transaction rates (thousands of transactions per second).

The cell has two main components:

- Cell Manager - a DPOD server (virtual or physical) that manages all Federated Cell Members (FCMs) and provides central DPOD services such as the Web Console, reports, alerts, etc.

- Federated Cell Member (FCM) - a DPOD server (usually physical, with local high-speed storage) that includes Store data nodes and agents (Syslog and WS-M) for collecting, parsing and storing data. A cell can have one or more federated cell members.

See the following diagram:

The following procedure describes the process of establishing a DPOD cell environment.

Prerequisites

- The DPOD cell manager and federated cell members must run the same DPOD version (the minimum version is v1.0.8.5).

- The DPOD cell manager can be installed in either Appliance Mode or Non-Appliance Mode with the Medium Load architecture type, as detailed in the Hardware and Software Requirements. The manager server can be either virtual or physical.

- The DPOD federated cell member (FCM) should be installed in Non-Appliance Mode with the High_20dv (High Load) architecture type, as detailed in the Hardware and Software Requirements.

- Each cell component (manager / FCM) should have two network interfaces:
  - External interface - for DPOD users to access the Web Console and for communication between DPOD and the Monitored Gateways.
  - Internal interface - for communication between internal DPOD components (should be a 10Gb Ethernet interface).

- Network ports should be opened in the network firewall as detailed below:

| From | To | Ports (Defaults) | Protocol | Usage |
|---|---|---|---|---|
| DPOD Cell Manager | Each Monitored Device | 5550 (TCP) | HTTP/S | Monitored device administration management interface |
| DPOD Cell Manager | DNS Server | 53 (TCP and UDP) | DNS | DNS services. Static IP address may be used. |
| DPOD Cell Manager | NTP Server | 123 (UDP) | NTP | Time synchronization |
| DPOD Cell Manager | Organizational mail server | 25 (TCP) | SMTP | Send reports by email |
| DPOD Cell Manager | LDAP | TCP 389 / 636 (SSL), TCP 3268 / 3269 (SSL) | LDAP | Authentication & authorization. Can be over SSL. |
| DPOD Cell Manager | Each DPOD Federated Cell Member | 9300-9305 (TCP) | ElasticSearch | ElasticSearch communication (data + management) |
| NTP Server | DPOD Cell Manager | 123 (UDP) | NTP | Time synchronization |
| Each Monitored Device | DPOD Cell Manager | 60000-60003 (TCP) | TCP | SYSLOG data |
| Each Monitored Device | DPOD Cell Manager | 60020-60023 (TCP) | HTTP/S | WS-M payloads |
| Users IPs | DPOD Cell Manager | 443 (TCP) | HTTP/S | IBM DataPower Operations Dashboard Web Console |
| Admins IPs | DPOD Cell Manager | 22 (TCP) | TCP | SSH |
| Each DPOD Federated Cell Member | DPOD Cell Manager | 9200, 9300-9400 (TCP) | ElasticSearch | ElasticSearch communication (data + management) |
| Each DPOD Federated Cell Member | DNS Server | 53 (TCP and UDP) | DNS | DNS services |
| Each DPOD Federated Cell Member | NTP Server | 123 (UDP) | NTP | Time synchronization |
| NTP Server | Each DPOD Federated Cell Member | 123 (UDP) | NTP | Time synchronization |
| Each Monitored Device | Each DPOD Federated Cell Member | 60000-60003 (TCP) | TCP | SYSLOG data |
| Each Monitored Device | Each DPOD Federated Cell Member | 60020-60023 (TCP) | HTTP/S | WS-M payloads |
| Admins IPs | Each DPOD Federated Cell Member | 22 (TCP) | TCP | SSH |
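Before installing, TCP reachability between cell components can be spot-checked from the source host. This is a minimal sketch that assumes bash (for its built-in /dev/tcp redirection) and the coreutils timeout command. The function names are illustrative and not part of DPOD. UDP ports (DNS, NTP) cannot be checked this way. A port reported as CLOSED may simply have no listener yet (for example, Store ports on a cell member that is not yet federated), so treat the result as a hint, not as proof of a firewall block.

```bash
#!/usr/bin/env bash
# Sketch: spot-check TCP reachability of DPOD ports from the current host.
# Requires bash (/dev/tcp) and coreutils `timeout`. Function names are illustrative.

# expand_ports SPEC: "9300-9305" -> 9300..9305 (one per line); "22" -> 22
expand_ports() {
  case $1 in
    *-*) seq "${1%-*}" "${1#*-}" ;;
    *)   echo "$1" ;;
  esac
}

# check_port HOST PORT: succeeds if a TCP connection opens within 3 seconds
check_port() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# check_ports HOST SPEC...: prints OPEN or CLOSED for every port of every SPEC
check_ports() {
  local host=$1 spec port
  shift
  for spec in "$@"; do
    for port in $(expand_ports "$spec"); do
      if check_port "$host" "$port"; then
        echo "OPEN $host:$port"
      else
        echo "CLOSED $host:$port"
      fi
    done
  done
}
```

For example, `check_ports 172.18.100.34 9300-9305` run on the cell manager checks the Store ports of a cell member at that (example) internal address.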

Cell Manager Installation

Prerequisites

- The DPOD cell manager can be installed in either Appliance Mode or Non-Appliance Mode with the Medium Load architecture type, as detailed in the Hardware and Software Requirements. The manager server can be either virtual or physical.

Installation

Install DPOD as described in one of the following installation procedures:

- Appliance Mode: Installation procedure

- Non-appliance Mode: Installation procedure

As described in the prerequisites section, the cell manager should have two network interfaces.

When installing DPOD, the user is prompted to choose the IP address for the Web Console - this should be the IP address of the external network interface.
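If it is not obvious which address belongs to which interface, the one-line output of `ip -o -4 addr show` can be reduced to interface/address pairs before you answer the prompt. This is a small sketch. The helper name `ipv4_by_interface` is hypothetical. The same pairs are also handy when passing the internal and external addresses to the federation script.

```bash
#!/usr/bin/env bash
# Sketch: print "INTERFACE IPV4" pairs from `ip -o -4 addr show` output.
# In one-line (-o) mode, field 2 is the interface name and field 4 is ADDRESS/PREFIX.
ipv4_by_interface() {
  awk '$3 == "inet" { split($4, a, "/"); print $2, a[1] }'
}
```

Usage: `ip -o -4 addr show | ipv4_by_interface`.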

Federated Cell Member Installation

The following section describes the installation process of a single Federated Cell Member (FCM). Repeat the procedure for every FCM.

Prerequisites

- The DPOD federated cell member (FCM) should be installed in Non-Appliance Mode with the High_20dv (High Load) architecture type, as detailed in the Hardware and Software Requirements.

- The following software packages (RPMs) should be installed: iptables, iptables-services, numactl

- The following software packages (RPMs) are recommended for system maintenance and troubleshooting, but are not required: telnet client, net-tools, iftop, tcpdump
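To confirm the required packages, you can query the RPM database for each one. This is a sketch. The query command is held in a `PKG_QUERY` variable (a hypothetical convenience, not part of DPOD) so the logic can be tried without rpm. On the FCM itself, the default `rpm -q --quiet` is used.

```bash
#!/usr/bin/env bash
# Sketch: print every required FCM package that is not installed.
# PKG_QUERY is a hypothetical override; by default each package is checked with rpm.
REQUIRED_PKGS=(iptables iptables-services numactl)
PKG_QUERY=${PKG_QUERY:-"rpm -q --quiet"}

missing_packages() {
  local pkg
  for pkg in "$@"; do
    # $PKG_QUERY is unquoted on purpose so "rpm -q --quiet" splits into words
    $PKG_QUERY "$pkg" || echo "$pkg"
  done
}
```

`missing_packages "${REQUIRED_PKGS[@]}"` prints nothing when all packages are present. Any names it prints can be installed with `yum install`.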

Installation

DPOD installation

- Install DPOD in Non-Appliance Mode: Installation procedure

As described in the prerequisites section, the federated cell member should have two network interfaces.

When installing DPOD, the user is prompted to choose the IP address for the Web Console - this should be the IP address of the external network interface (although the FCM does not run the Web Console service).

- After DPOD installation is complete, the user should execute the following operating system performance optimization script:

/app/scripts/tune-os-parameters.sh

Reboot the server for the new performance optimizations to take effect.

Prepare Cell Member for Federation

Prepare mount points

The cell member is usually a "bare metal" server with NVMe disks to maximize throughput.

Each Store logical node (service) is bound to a specific physical processor, disks and memory using NUMA (Non-Uniform Memory Access).

The default cell member configuration assumes six NVMe disks serving three Store data nodes (two disks per node).

Configure the following OS mount points before federating the DPOD installation as a cell member.

We highly recommend using LVM (Logical Volume Manager) so that storage can be extended flexibly to meet future needs.

Note: complete the empty table cells below according to your specific hardware.

| Store Node | Mount Point Path | Disk Bay | Disk Serial | Disk Path | CPU No |
|---|---|---|---|---|---|
| 2 | /data2 | | | | |
| 2 | /data22 | | | | |
| 3 | /data3 | | | | |
| 3 | /data33 | | | | |
| 4 | /data4 | | | | |
| 4 | /data44 | | | | |

How to identify Disk OS path and Disk serial

- To identify which NVMe disk bay is bound to which CPU, refer to the hardware manufacturer's documentation.

Also, write down each disk's serial number by visually inspecting the disk. To identify the disk OS path (for example, /dev/nvme0n1) and the disk serial number, install the NVMe disk utility provided by the hardware supplier. For example, for Intel-based NVMe SSDs, install the "Intel® SSD Data Center Tool" (isdct).

Example output of the Intel SSD DC tool:

    isdct show -intelssd

    - Intel SSD DC P4500 Series PHLE822101AN3PXXXX -

    Bootloader : 0133
    DevicePath : /dev/nvme0n1
    DeviceStatus : Healthy
    Firmware : QDV1LV45
    FirmwareUpdateAvailable : Please contact your Intel representative about firmware update for this drive.
    Index : 0
    ModelNumber : SSDPE2KE032T7L
    ProductFamily : Intel SSD DC P4500 Series
    SerialNumber : PHLE822101AN3PXXXX

- Use the disk bay number and the disk serial number (visually identified), and correlate them with the output of the disk tool to identify the disk OS path.
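When a server has several drives, the isdct output can be reduced to serial/path pairs to make this correlation easier. This is a sketch. It assumes the "Key : Value" layout of the isdct example output, with one "- <model> <serial> -" header line per drive. Other vendors' tools print different formats. The helper name `serial_to_path` is hypothetical. On many systems, `lsblk -o NAME,SERIAL` gives similar information without a vendor tool.

```bash
#!/usr/bin/env bash
# Sketch: reduce `isdct show -intelssd` output to "SERIAL DEVICEPATH" lines.
# Assumes one "- <model> <serial> -" header per drive followed by "Key : Value" lines.
serial_to_path() {
  awk '
    function flush() {
      if (serial != "" && path != "") print serial, path
      serial = path = ""
    }
    { sub(/^[ \t]+/, ""); sub(/[ \t]+$/, "") }   # ignore surrounding whitespace
    /^- / { flush() }                            # a new drive section starts
    /^DevicePath :/   { path = $0;   sub(/^DevicePath : */, "", path) }
    /^SerialNumber :/ { serial = $0; sub(/^SerialNumber : */, "", serial) }
    END { flush() }
  '
}
```

Usage: `isdct show -intelssd | serial_to_path`.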

Examples for Mount Points and Disk Configurations

| Store Node | Mount Point Path | Disk Bay | Disk Serial | Disk Path | CPU No |
|---|---|---|---|---|---|
| 2 | /data2 | 1 | PHLE822101AN3PXXXX | /dev/nvme0n1 | 1 |
| 2 | /data22 | 2 | | /dev/nvme1n1 | 1 |
| 3 | /data3 | 4 | | /dev/nvme2n1 | 2 |
| 3 | /data33 | 5 | | /dev/nvme3n1 | 2 |
| 4 | /data4 | 12 | | /dev/nvme4n1 | 3 |
| 4 | /data44 | 13 | | /dev/nvme5n1 | 3 |

Example for LVM Configuration

    pvcreate -ff /dev/nvme0n1
    vgcreate vg_data2 /dev/nvme0n1
    lvcreate -l 100%FREE -n lv_data vg_data2
    mkfs.xfs -f /dev/vg_data2/lv_data

    pvcreate -ff /dev/nvme1n1
    vgcreate vg_data22 /dev/nvme1n1
    lvcreate -l 100%FREE -n lv_data vg_data22
    mkfs.xfs /dev/vg_data22/lv_data

The /etc/fstab file:

    /dev/vg_data2/lv_data   /data2   xfs defaults 0 0
    /dev/vg_data22/lv_data  /data22  xfs defaults 0 0
    /dev/vg_data3/lv_data   /data3   xfs defaults 0 0
    /dev/vg_data33/lv_data  /data33  xfs defaults 0 0
    /dev/vg_data4/lv_data   /data4   xfs defaults 0 0
    /dev/vg_data44/lv_data  /data44  xfs defaults 0 0
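The same LVM, filesystem and fstab steps repeat for every Store disk, so they can be generated from the mount point and disk table. This sketch only prints the commands so you can review them first. The MOUNT:DEVICE pairs in `DISKS` are the example values from the disk configuration table and must be replaced with your own. The function names are hypothetical. Note that `pvcreate -ff` and `mkfs.xfs -f` destroy any existing data on the target disk.

```bash
#!/usr/bin/env bash
# Sketch: print (not run) the LVM, filesystem and fstab steps for each Store disk,
# using the vg_<mount>/lv_data naming from the examples. Review before running as root.
DISKS=(
  /data2:/dev/nvme0n1 /data22:/dev/nvme1n1
  /data3:/dev/nvme2n1 /data33:/dev/nvme3n1
  /data4:/dev/nvme4n1 /data44:/dev/nvme5n1
)

# gen_disk_cmds MOUNT DEVICE: commands for one disk
gen_disk_cmds() {
  local mp=$1 dev=$2
  local vg="vg_${mp#/}"   # /data2 -> vg_data2
  echo "pvcreate -ff $dev"
  echo "vgcreate $vg $dev"
  echo "lvcreate -l 100%FREE -n lv_data $vg"
  echo "mkfs.xfs -f /dev/$vg/lv_data"
  echo "mkdir -p $mp"
  echo "echo '/dev/$vg/lv_data $mp xfs defaults 0 0' >> /etc/fstab"
}

# gen_all: commands for every MOUNT:DEVICE pair in DISKS
gen_all() {
  local pair
  for pair in "${DISKS[@]}"; do
    gen_disk_cmds "${pair%%:*}" "${pair#*:}"
  done
}
```

For example, `gen_all > setup-store-disks.sh` writes the commands to a file. After reviewing it, run it as root, followed by `mount -a`.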

Example of the Final Configuration for 3 Store Nodes

This does not include the other mount points described in the DPOD Hardware and Software Requirements.

    # lsblk
    NAME                MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    nvme0n1             259:0    0  2.9T  0 disk
    └─vg_data2-lv_data  253:6    0  2.9T  0 lvm  /data2
    nvme1n1             259:5    0  2.9T  0 disk
    └─vg_data22-lv_data 253:3    0  2.9T  0 lvm  /data22
    nvme2n1             259:1    0  2.9T  0 disk
    └─vg_data3-lv_data  253:2    0  2.9T  0 lvm  /data3
    nvme3n1             259:2    0  2.9T  0 disk
    └─vg_data33-lv_data 253:5    0  2.9T  0 lvm  /data33
    nvme4n1             259:4    0  2.9T  0 disk
    └─vg_data44-lv_data 253:7    0  2.9T  0 lvm  /data44
    nvme5n1             259:3    0  2.9T  0 disk
    └─vg_data4-lv_data  253:8    0  2.9T  0 lvm  /data4
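After a reboot (or `mount -a`), it is worth confirming that every Store mount point is actually mounted. A missing mount could silently leave data on the root filesystem. This sketch reads /proc/mounts, where field 1 is the device and field 2 is the mount point. `MOUNTS_FILE` is a hypothetical override, included only so the check can be tried against a sample file.

```bash
#!/usr/bin/env bash
# Sketch: report whether each Store mount point appears in /proc/mounts.
# MOUNTS_FILE is a hypothetical override (e.g. for trying the check on a sample file).
MOUNTS_FILE=${MOUNTS_FILE:-/proc/mounts}
STORE_MOUNTS=(/data2 /data22 /data3 /data33 /data4 /data44)

check_mounts() {
  local mp dev
  for mp in "$@"; do
    # keep the device of the last (topmost) entry mounted on this path
    dev=$(awk -v mp="$mp" '$2 == mp { d = $1 } END { print d }' "$MOUNTS_FILE")
    if [ -n "$dev" ]; then
      echo "OK $mp $dev"
    else
      echo "MISSING $mp"
    fi
  done
}
```

Usage: `check_mounts "${STORE_MOUNTS[@]}"`. Each LVM volume is typically listed as /dev/mapper/vg_dataN-lv_data.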

Prepare local OS based firewall

Most Linux-based operating systems use a local firewall service (iptables or firewalld).

In a Non-Appliance Mode DPOD installation, the OS is provided by the user, and it is the user's responsibility to allow the required connectivity to and from the server.

Make sure the connectivity detailed in the network ports table in the Prerequisites section is allowed by the OS local firewall service.

When the cell manager is installed in Appliance Mode, its local OS firewall is configured by the cell member federation script.
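On a Non-Appliance FCM with the iptables-services package, the inbound part of the ports table could be opened with rules like the ones below. This is a sketch under several assumptions. `MANAGER_IP` is a placeholder for the cell manager's internal address. Rules other than the Store rule accept any source, so tighten them with `-s` to match your policy (for example, SSH from admin IPs only). Rules are inserted with `-I` because the default iptables-services ruleset usually ends the INPUT chain with a REJECT rule, and rules appended with `-A` after it would never match. By default the commands are only printed (`DRY_RUN=1`).

```bash
#!/usr/bin/env bash
# Sketch: inbound iptables rules for an FCM, derived from the network ports table.
# MANAGER_IP is a placeholder; set DRY_RUN=0 to actually apply the rules (as root).
MANAGER_IP=${MANAGER_IP:-172.18.100.10}

# run CMD...: print the command in dry-run mode, otherwise execute it
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

fcm_rules() {
  local mgr=$1
  # Store (ElasticSearch) traffic from the cell manager
  run iptables -I INPUT -p tcp -s "$mgr" --dport 9300:9305 -j ACCEPT
  # Syslog and WS-M traffic from the monitored devices
  run iptables -I INPUT -p tcp --dport 60000:60003 -j ACCEPT
  run iptables -I INPUT -p tcp --dport 60020:60023 -j ACCEPT
  # NTP and SSH
  run iptables -I INPUT -p udp --dport 123 -j ACCEPT
  run iptables -I INPUT -p tcp --dport 22 -j ACCEPT
}
```

Review the output of `fcm_rules "$MANAGER_IP"` first. Then run `DRY_RUN=0 fcm_rules "$MANAGER_IP"` as root and persist the rules with `service iptables save`.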

Cell Member Federation

To federate and configure a cell member, run the following script on the cell manager once per cell member. For example, to add two cell members, run the script twice on the cell manager: the first time with the IP addresses of the first cell member, and the second time with the IP addresses of the second cell member.

Important: run the command as the OS "root" user.

    /app/scripts/configure_federated_cluster_manager.sh -a <internal IP address of the cell member> -g <external IP address of the cell member>

For example:

    /app/scripts/configure_federated_cluster_manager.sh -a 172.18.100.34 -g 172.17.100.33

Example of a successful execution. Note that the script writes two log files, one on the cell manager and one on the cell member. The log file names are shown in the script's output.

Example of a failed execution. Check the log files for further information.

In case of a failure, the script tries to roll back the configuration changes it made, so you can fix the problem and run it again.
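When several cell members are federated, you can script the per-member runs on the cell manager. This sketch is only a convenience wrapper around the documented command. The `FCMS` entries are hypothetical "internal,external" address pairs; the first reuses the example addresses above. Commands are printed unless `DRY_RUN=0`. The loop stops at the first failure so you can check the log files before re-running.

```bash
#!/usr/bin/env bash
# Sketch: run the federation script once per cell member, from the cell manager.
# FCMS holds hypothetical "internal_ip,external_ip" pairs; replace them with your own.
# Commands are only printed unless DRY_RUN=0.
FCMS=("172.18.100.34,172.17.100.33" "172.18.100.36,172.17.100.35")
SCRIPT=/app/scripts/configure_federated_cluster_manager.sh

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

federate_all() {
  local pair
  # the federation script must be executed as root
  if [ "${DRY_RUN:-1}" != 1 ] && [ "$(id -u)" -ne 0 ]; then
    echo "federate_all: run as root" >&2
    return 1
  fi
  for pair in "$@"; do
    # stop at the first failure so its log files can be checked before re-running
    run "$SCRIPT" -a "${pair%%,*}" -g "${pair#*,}" || return 1
  done
}
```

Usage: `federate_all "${FCMS[@]}"` to preview, then `DRY_RUN=0 federate_all "${FCMS[@]}"` as root.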

Cell Member Federation Post Steps

NUMA configuration

The DPOD cell member uses NUMA (Non-Uniform Memory Access).

The default cell member configuration binds DPOD's agents to CPU 0 and the Store nodes to CPU 1.

If the server has four CPUs, edit the service files of nodes 2-3 and change their CPU binding to 2 and 3 respectively.

Identify NUMA configuration

To identify the number of CPUs (NUMA nodes) installed on the server, use the numactl utility:

    numactl -s

Example output for a four-CPU server:

    policy: default
    preferred node: current
    physcpubind: 0 1 2 3 4 5 6 7 8 9 10 11 12
    cpubind: 0 1 2 3
    nodebind: 0 1 2 3
    membind: 0 1 2 3
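For use in a script, the number of NUMA nodes can be read from the `nodebind:` line of `numactl -s`, as in this sketch. The helper `count_numa_nodes` is hypothetical, not a DPOD tool. As an alternative, the first line of `numactl --hardware` reads "available: N nodes".

```bash
#!/usr/bin/env bash
# Sketch: count the NUMA nodes listed on the "nodebind:" line of `numactl -s`.
count_numa_nodes() {
  # split each line at ": "; split() with " " ignores repeated and trailing spaces
  awk -F': *' '$1 == "nodebind" { print split($2, ids, " ") }'
}
```

For the four-CPU example output, `numactl -s | count_numa_nodes` prints 4.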

Alter Store Nodes 3-4

Optional: if the server has four CPUs, alter the Store nodes' service files to bind each node to a different CPU.

The service files are located in the /etc/init.d/ directory and are named MonTier-es-raw-trans-Node-2 and MonTier-es-raw-trans-Node-3.

For node MonTier-es-raw-trans-Node-2:

    OLD VALUE: numa="/usr/bin/numactl --membind=1 --cpunodebind=1"
    NEW VALUE: numa="/usr/bin/numactl --membind=2 --cpunodebind=2"

For node MonTier-es-raw-trans-Node-3:

    OLD VALUE: numa="/usr/bin/numactl --membind=1 --cpunodebind=1"
    NEW VALUE: numa="/usr/bin/numactl --membind=3 --cpunodebind=3"
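The OLD VALUE/NEW VALUE change can also be applied with sed. This sketch assumes each service file contains a single `numa=` line in exactly the form shown above (possibly indented). It relies on GNU sed's `-i.bak`, which keeps a copy of the original next to the file; you may prefer to move that copy out of /etc/init.d afterwards. The helper name `rebind_node` is hypothetical. The new binding presumably takes effect the next time the service starts.

```bash
#!/usr/bin/env bash
# Sketch: rebind a Store node service to NUMA node N (memory and CPU), keeping a .bak copy.
# Assumes a line like: numa="/usr/bin/numactl --membind=1 --cpunodebind=1"
rebind_node() {
  local file=$1 node=$2
  sed -i.bak \
    "s|^\([[:space:]]*numa=\"/usr/bin/numactl --membind=\)[0-9]*\( --cpunodebind=\)[0-9]*\"|\1$node\2$node\"|" \
    "$file"
  # show the resulting line; grep fails if no numa= line is present
  grep '^[[:space:]]*numa=' "$file"
}
```

Usage: `rebind_node /etc/init.d/MonTier-es-raw-trans-Node-2 2` and `rebind_node /etc/init.d/MonTier-es-raw-trans-Node-3 3`.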

Cell Member Federation Verification

After a successful execution, the new cell member appears on the Manage → System → Nodes page.

For example, if we added two cell members:

The new agents are also shown on the Manage → Internal Health → Agents page.

For example, with one cell manager that has two agents and one cell member that has four agents, the page shows six agents:

Configure the Monitored Device to Use the Remote Collector's Agents

You can configure an entire monitored device, or only a specific domain, to use a remote collector's agents.

To configure a monitored device or a specific domain, follow the instructions in Adding Monitored Devices.